Unlocking the Power of combining Data Warehouse & Lakehouse in a Fabric Medallion Architecture

Ever wondered how to harness the strengths of both a Data Warehouse and a Lakehouse in a unified architecture? Let's explore the possibilities:

The Data Warehouse excels in transforming and serving data in T-SQL.

The Lakehouse excels in ingesting and transforming data using Spark runtime (Python, Scala, PySpark, R).

But how can we combine booths ?

Imagine a scenario where the robust capabilities of both are seamlessly combined. Behold the Data Warehouse & Lakehouse example Architecture.

General decision points for this architecture:

Transforming data with Spark notebooks

T-SQL DDL/DML capability is required for End users (Data Warehouse-exclusive feature)

Consumption requirements from Data Warehouse endpoint for certain reporting tools or processes

Developer team skill set is primarily Spark.

The Medallion Architecture

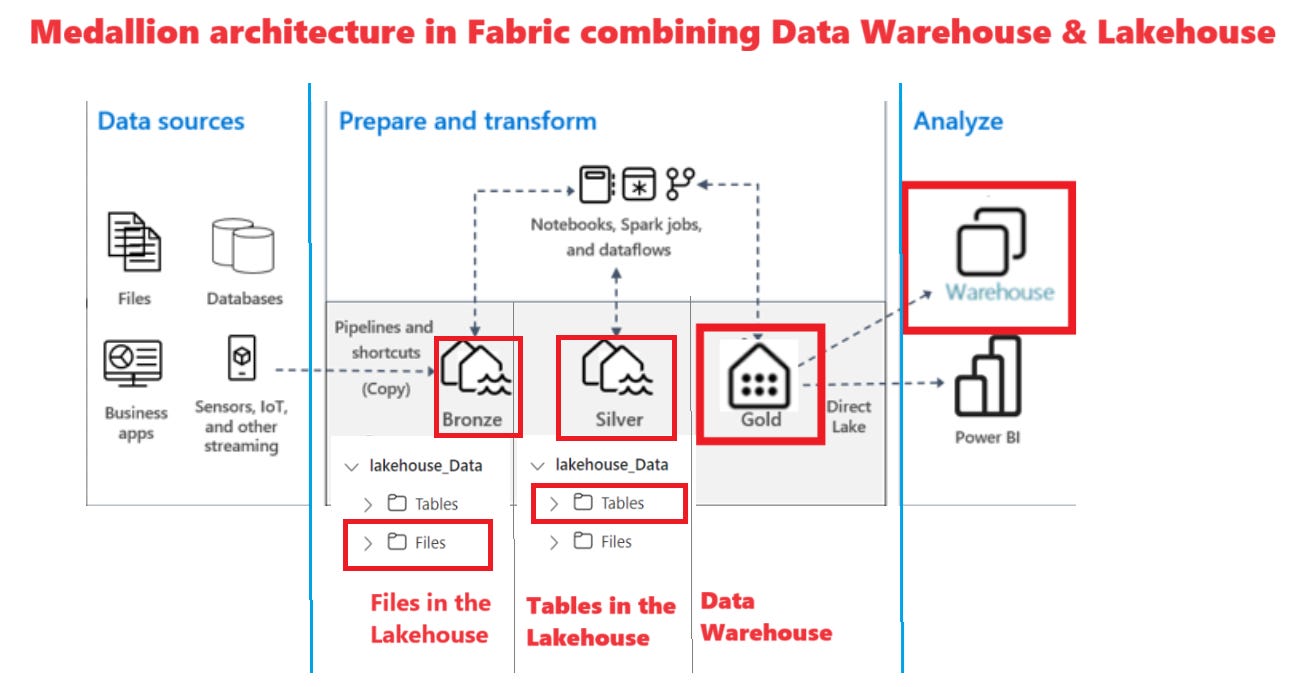

A medallion architecture comprises of three distinct layers or zones: Bronze (raw), Silver (validated), and Gold (enriched).

The medallion architecture is the recommended approach in Fabric. Let's break it down:

Bronze Layer: Ingest raw data into the Lakehouse "Files" section using Fabric pipelines, Dataflows, Shortcuts, or Spark notebooks/jobs, forming the typical Bronze layer.

Silver Layer: Employ data engineering frameworks to clean and store data as Delta Tables in the Lakehouse. This is where enrichment happens, by combining data sources together, transforming the existing data, and cleansing it - forming the typical Silver layer.

Gold Layer: Utilize the Data Warehouse to create the Gold layer, by creating the dimensional model and expose it to BI uses. We can employ CTAS statements (Create Table As Select) to transform data from the Silver layer to the Gold layer in the Data Warehouse.

Gold data is business-ready, with a STAR schema, normalized data, and applied business logic, so the data is ready for consumption

Consumption here will be through the Data Warehouse endpoint, not with the SQL Analytics Endpoint of the Lakehouse as the Gold Lakehouse in this combined architecture is replaced by a Gold Data Warehouse.

Unleashing the Power:

By combining the Lakehouse and Data Warehouse into a single architecture, we get the best of both worlds. This architecture allows the consumption layer (through Power BI or Warehouse endpoint) to leverage Data Warehouse features like DDL/DML support for users/developers at the gold layer and Data Warehouse endpoint for various reporting tools and processes.

Spark is the driving force behind ETL/ELT transformations, ensuring optimal performance and and flexibility of your code.

In essence, it's about achieving synergy—transforming and serving data with precision and efficiency. The future of data architecture is here, offering a seamless blend of SQL and Python capabilities