Load data incrementally from the source to the destination using Dataflow Gen2 :

Load new data incrementally from Azure SQL DB to Fabric Lakehouse via Dataflow Gen2

With Dataflows Gen2, which supports data destinations, we can set up incremental loads in order to only get new data from our sources and append it to our data destination. This enables us to maintain up-to-date reports.

❗Note: It's important to clarify that this method is distinct from incremental refresh.

❗Incremental Refresh support for Dataflows Gen2 is scheduled for Q3 2024.

Configuring the dataflow is straightforward: we simply need to create a dataflow that loads recent data from our source and appends it to our destination. This way, we can keep the data destination up to date with our source data

So, the objective is to load only new data via Dataflows.

To achieve this, we can obtain the latest timestamp, such as the most recent order date or the last order ID. This information allows us to identify the last line loaded into the data destination and use it as a filter when loading data from the source.

Once we have filtered the recent source data, all that's left is to append it to the destination.

In my example, I loaded data from an Azure SQL Database into a Microsoft Fabric Lakehouse.

👇👇The steps I followed to set up incremental load with Dataflow Gen2:

✅Create a Lakehouse as data destination and full load data into it.

✅Create a Dataflow Gen2 to incrementally load the data from the source (Azure SQL Database ) into the Lakehouse

Prepare the data in the destination

These steps detail how to load data from the source (Azure SQL database in my case) to the destination (a Fabric Lakehouse in this example):

From Fabric interface, create a new Lakehouse as data destination

Load the data in the Lakehouse

After creating a lakehouse, we can load data - in any common format - from various sources; including local files, databases, or APIs.

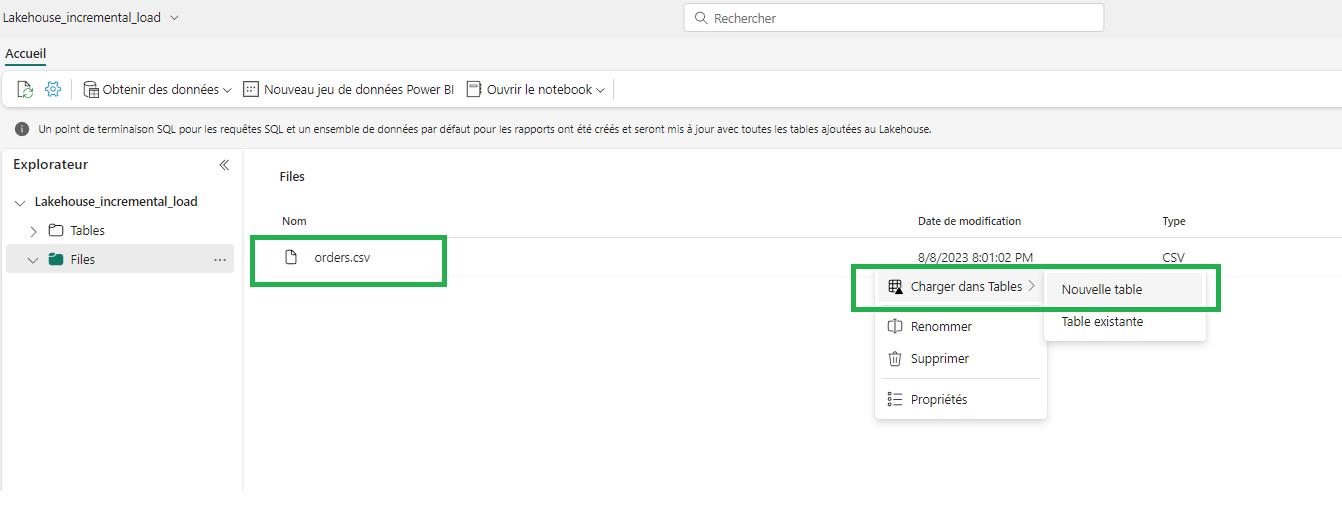

In my case I simply uploaded a file (orders.csv) from my local computer.

❗❗This file is an extract of the data from the Orders table in my Azure SQL database. Alternatively, I suggest you use a Dataflow Gen2 to initially full load the table data from your source database into the lakehouse for the first time.

And later in the article we will create a Dataflow that incrementally loads the data and which will be executed daily.

Load data file into a table

In my case I need to load the data from the file into a table named “Orders”

My Orders table loaded in the lakehouse contains the following columns:

I will then use my Azure SQL database as source to feed my lakehouse table with daily incremental data loading.

Configure an incremental data load with Dataflow Gen2:

Incremental loading of data into a data destination requires a technique that allows only new data to be loaded into the data destination.

These steps detail how to create an incremental load dataflow, that will contain two queries:

👉 The first step involves retrieving the latest Order date from my Lakehouse destination "Orders" table

👉 The second query is designed to filter the data to be loaded from the source, based on the latest Order date

1) Last Order Date query:

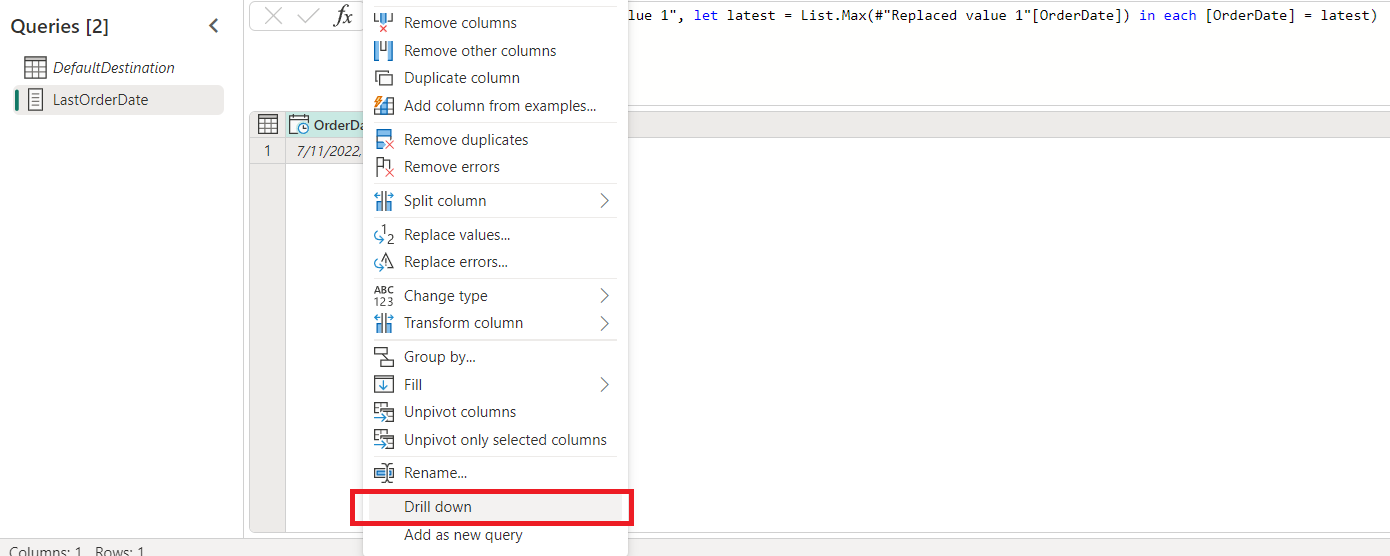

Create a new query named "LastOrderDate" to retrieve data from the "Orders" table in my Lakehouse

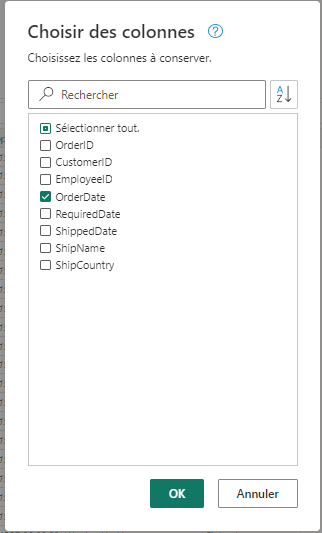

Keep only “OrderDate” column

Filter the column on the latest order date

Right-click on the “OrderDate” column and select Drill Down.

We now have a query that returns the last Order Date from the lakehouse. This query will be used to filter the data from the source.

The second query to be created in this dataflow aims to filter the data from my Azure SQL DB source based on the last order date loaded into the lakehouse.

2) Incremental Load Orders query:

Here, the idea is to create a query that fetches new data from the data source and periodically loads it into the destination. In my example, I will be loading the Orders data daily from Azure SQL DB to the Lakehouse.

Create new M query in the dataflow, by connecting to the SQL DB Orders table, name it “Incremental_Load_Orders” and then select the same columns to be loaded into the destination.

Add a new step to filter the OrderDate column values that are after the “lastOrderDate”

The "incremental_load_Orders" query now includes only the rows corresponding to orders that have been added after the last order date already loaded into the Lakehouse.

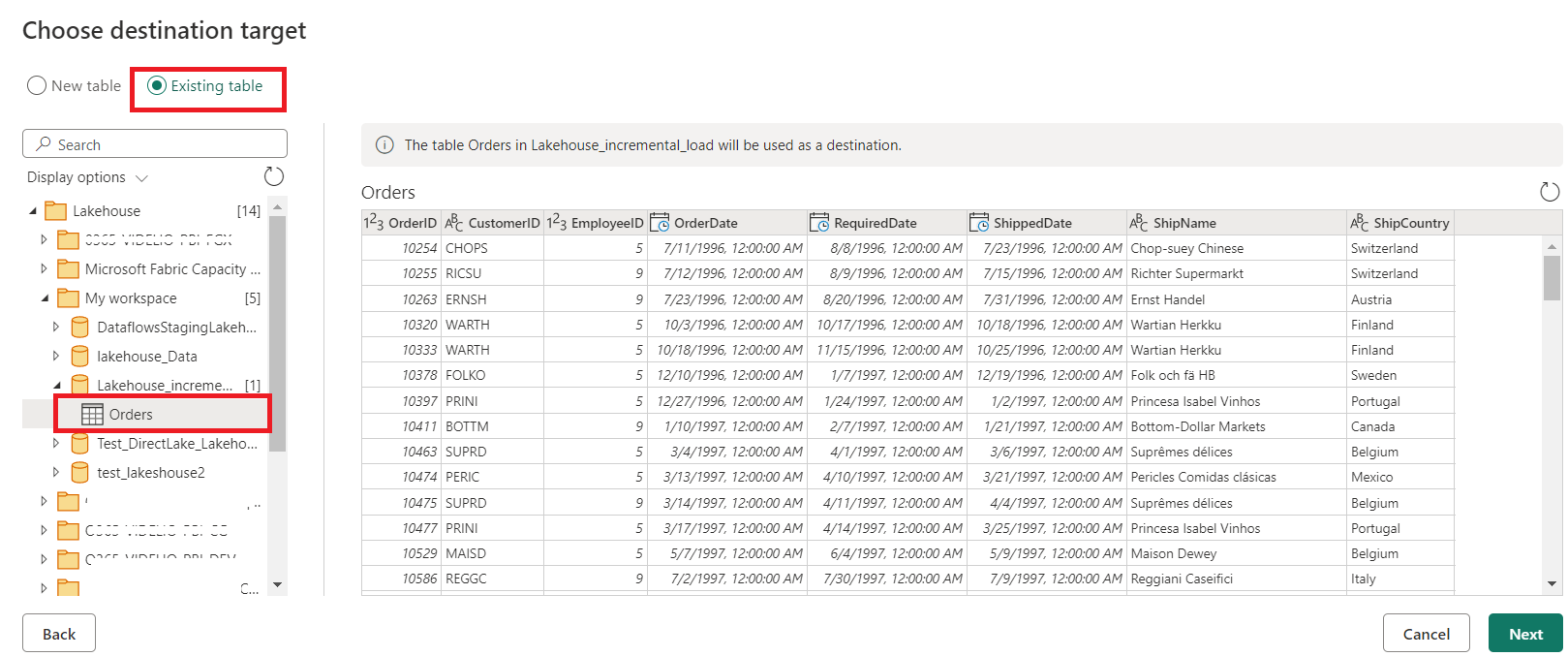

Add a destination to the “incremental_load_Orders” query

Here we need to use the Append method to update the data in the lakehouse destination.

Finally, publish the dataflow

Result:

🎉 Our established dataflow will copy new data from the SQL DB source, starting from the last Order Date that has been loaded into the Lakehouse table, and then append it to the same Lakehouse table destination.

We can then establish a Data Pipeline to automate the execution of this Dataflow on a periodic schedule, ensuring the seamless and timely integration of fresh data from the Azure SQL DB source into the Lakehouse destination. This systematic approach guarantees the maintenance of up-to-date data.

We can also simply configure a scheduled dataflow actualisation in the workspace.

More details in this documentation