Document your Power BI Semantic Model with INFO DAX Functions via the Semantic Link and Store Results in Fabric Lakehouse

I tested how to extract information from a Semantic Model by running INFO DAX functions, combining the results into a comprehensive table, and storing that table in a Fabric Lakehouse, with the goal of automating the generation of documentation for a Semantic Model.

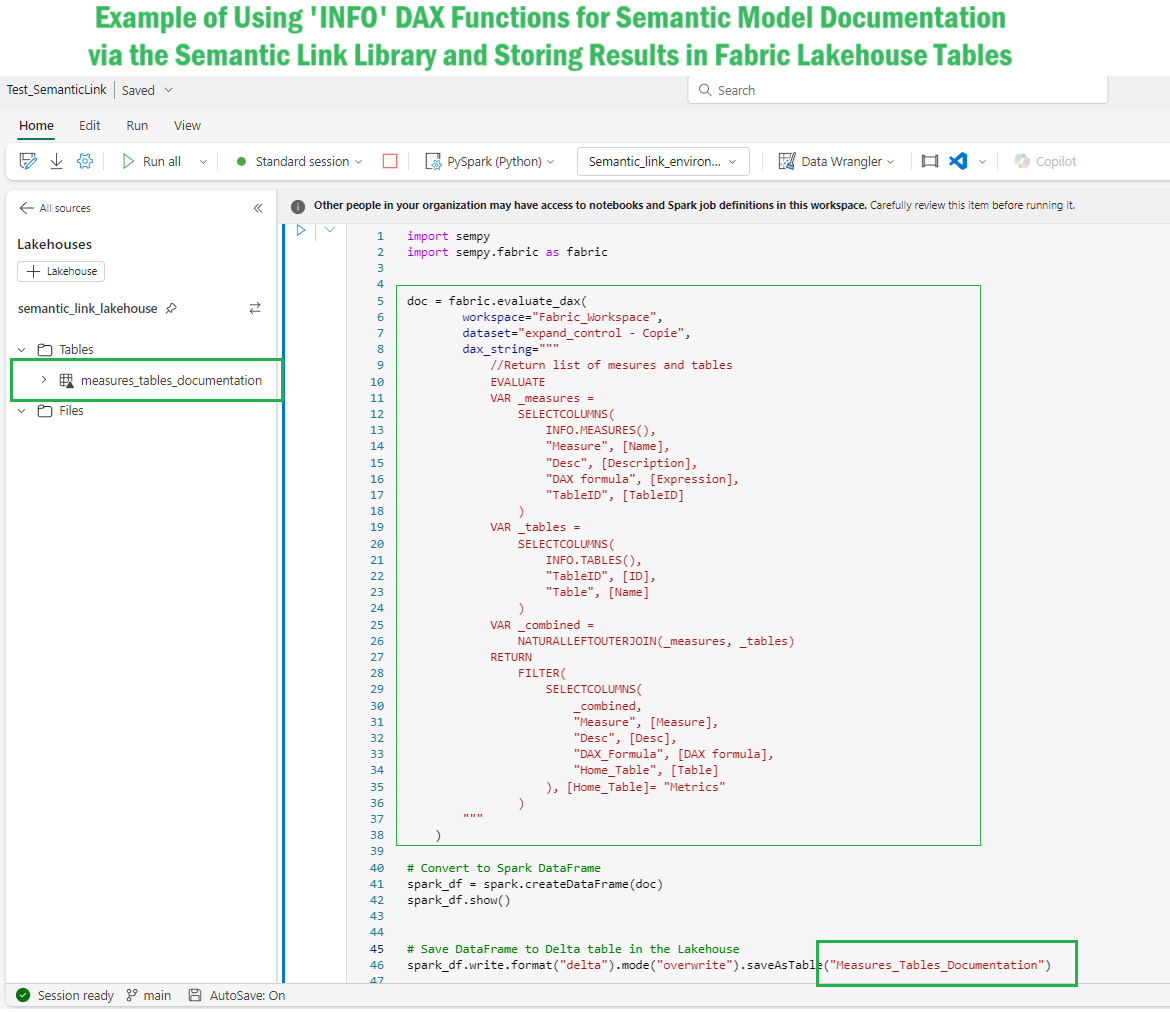

Here’s how I leveraged 𝐈𝐍𝐅𝐎 𝐃𝐀𝐗 𝐅𝐮𝐧𝐜𝐭𝐢𝐨𝐧𝐬 and 𝐒𝐞𝐦𝐚𝐧𝐭𝐢𝐜 𝐋𝐢𝐧𝐤:

1) 𝐄𝐱𝐭𝐫𝐚𝐜𝐭 𝐌𝐞𝐭𝐚𝐝𝐚𝐭𝐚 𝐰𝐢𝐭𝐡 𝐈𝐍𝐅𝐎 𝐃𝐀𝐗 𝐅𝐮𝐧𝐜𝐭𝐢𝐨𝐧𝐬:

INFO DAX functions allow you to extract valuable metadata about your model, including tables, columns, relationships, and measures (using functions such as INFO.TABLES, INFO.COLUMNS, INFO.RELATIONSHIPS, and INFO.MEASURES).

I created a DAX query that merges information from these functions to build a comprehensive documentation view of the semantic model.
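The exact query is not reproduced in this post, so the following is only a minimal sketch of what a merged INFO query could look like, built as a Python string so it can be reused in a notebook. The helper `build_join_query`, the output aliases, and the use of NATURALLEFTOUTERJOIN are assumptions for illustration, and the source column names ([ID], [TableID], [ExplicitName], [Expression]) should be checked against the output of the INFO functions on your own model.

```python
def build_join_query(info_function: str, columns: dict[str, str]) -> str:
    """Build a DAX query that joins one INFO function to INFO.TABLES on the table ID.

    `columns` maps an output alias to a source column of the INFO function.
    This helper is hypothetical, not part of any library.
    """
    selected = ",\n        ".join(
        f'"{alias}", [{source}]' for alias, source in columns.items()
    )
    return f"""EVALUATE
VAR _tables =
    SELECTCOLUMNS(INFO.TABLES(), "TableID", [ID], "TableName", [Name])
VAR _details =
    SELECTCOLUMNS(
        {info_function}(),
        "TableID", [TableID],
        {selected}
    )
RETURN
    NATURALLEFTOUTERJOIN(_details, _tables)
"""


# One query per documentation view (assumed column choices).
DOC_QUERIES = {
    "columns": build_join_query(
        "INFO.COLUMNS",
        {"ColumnName": "ExplicitName", "ColumnDescription": "Description"},
    ),
    "measures": build_join_query(
        "INFO.MEASURES",
        {"MeasureName": "Name", "MeasureExpression": "Expression"},
    ),
}
```

Printing `DOC_QUERIES["columns"]` gives a query you can paste into the DAX query view first, to check that it runs before automating it.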

2) 𝐔𝐬𝐞 𝐭𝐡𝐞 𝐒𝐞𝐦𝐚𝐧𝐭𝐢𝐜-𝐋𝐢𝐧𝐤 𝐥𝐢𝐛𝐫𝐚𝐫𝐲 𝐭𝐨 𝐑𝐮𝐧 𝐃𝐀𝐗 𝐜𝐨𝐝𝐞:

The Semantic Link library can execute DAX queries against a semantic model through its evaluate_dax() function.

Using 𝐒𝐞𝐦𝐚𝐧𝐭𝐢𝐜-𝐋𝐢𝐧𝐤 within a Fabric Notebook, I passed the INFO DAX query to the 𝗲𝘃𝗮𝗹𝘂𝗮𝘁𝗲_𝗱𝗮𝘅() 𝗳𝘂𝗻𝗰𝘁𝗶𝗼𝗻.
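As a rough sketch of this step, the snippet below wraps `sempy.fabric.evaluate_dax` in a small helper. The helper name `run_dax`, the `evaluate` parameter (there only so the function can be tried outside Fabric), and the dataset name in the usage note are hypothetical; the query used is a deliberately simple placeholder.

```python
from typing import Any, Callable, Optional


def run_dax(
    dataset: str,
    dax_query: str,
    workspace: Optional[str] = None,
    evaluate: Optional[Callable[..., Any]] = None,
) -> Any:
    """Run a DAX query against a semantic model and return the result.

    Inside a Fabric notebook the default path calls sempy.fabric.evaluate_dax,
    which returns a pandas-compatible DataFrame. `evaluate` is a hypothetical
    hook for substituting a stand-in function when sempy is not available.
    """
    if evaluate is None:
        # Imported lazily: sempy is only available in the Fabric runtime.
        import sempy.fabric as fabric

        evaluate = fabric.evaluate_dax
    return evaluate(dataset=dataset, dax_string=dax_query, workspace=workspace)


# Placeholder query: lists the tables of the model.
TABLES_QUERY = "EVALUATE INFO.TABLES()"
```

In a Fabric notebook, something like `df = run_dax("My Semantic Model", TABLES_QUERY)` would then return the table metadata as a DataFrame, where "My Semantic Model" stands in for your own dataset name.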

3) 𝐒𝐭𝐨𝐫𝐞 𝐭𝐡𝐞 𝐆𝐞𝐧𝐞𝐫𝐚𝐭𝐞𝐝 𝐃𝐀𝐗 𝐐𝐮𝐞𝐫𝐲 𝐑𝐞𝐬𝐮𝐥𝐭𝐬 𝐢𝐧 𝐅𝐚𝐛𝐫𝐢𝐜 𝐋𝐚𝐤𝐞𝐡𝐨𝐮𝐬𝐞:

I stored the generated results in Delta tables inside a Fabric Lakehouse using PySpark code, so the documentation can be refreshed automatically and analyzed later.
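The storage step could look roughly like the sketch below. It assumes the query result is a pandas-compatible DataFrame, that a default Lakehouse is attached to the notebook, and that `spark` is the session the Fabric notebook provides. The helpers `clean_column_name` and `save_to_lakehouse` and the table name in the usage note are hypothetical. Column names are cleaned first because DAX results often come back wrapped in brackets or containing spaces, which Delta tables reject by default.

```python
import re


def clean_column_name(name: str) -> str:
    """Turn a DAX result column name into a Delta-friendly identifier."""
    # Drop surrounding whitespace and the outer brackets DAX adds, e.g. "[TableName]".
    name = name.strip().strip("[]")
    # Replace any run of other characters (spaces, inner brackets) with "_".
    name = re.sub(r"[^0-9A-Za-z_]+", "_", name)
    return name.strip("_")


def save_to_lakehouse(pandas_df, table_name: str, spark) -> None:
    """Write a pandas-compatible DataFrame to a Delta table in the attached Lakehouse.

    `spark` is the SparkSession available in a Fabric notebook.
    """
    renamed = pandas_df.rename(columns=clean_column_name)
    spark_df = spark.createDataFrame(renamed)
    # Overwrite so each run replaces the previous documentation snapshot.
    spark_df.write.format("delta").mode("overwrite").saveAsTable(table_name)
```

Calling `save_to_lakehouse(df, "model_documentation", spark)` on a schedule would keep the table current; switching the mode to `"append"` and adding a timestamp column is one way to keep a history instead.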

👏👏Explore the full potential of INFO DAX functions to create detailed Power BI documentation. Combined with the Semantic Link library, these functions offer a powerful way to automate the documentation of your semantic models and keep it up to date.👏👏

👉Examples using the new 'INFO' DAX functions by Michael Kovalsky: https://lnkd.in/etaHYMUj